Blog Backend and CI/CD

I spent some time adjusting and optimizing my CI/CD pipeline. It takes my Markdown files from Obsidian, uses Hugo to convert and build the site, and redeploys it in Portainer.

I got some inspiration from NetworkChuck on how to do this, but I wanted to host it myself in my own Docker environment. You can see his post about this here

Please be nice, this is my first time posting something like this on a blog.

What I did differently

I did not want to set up a webhook to Hostinger like Chuck did. Instead, I wanted to deploy the site as a Docker container on my own Portainer platform.

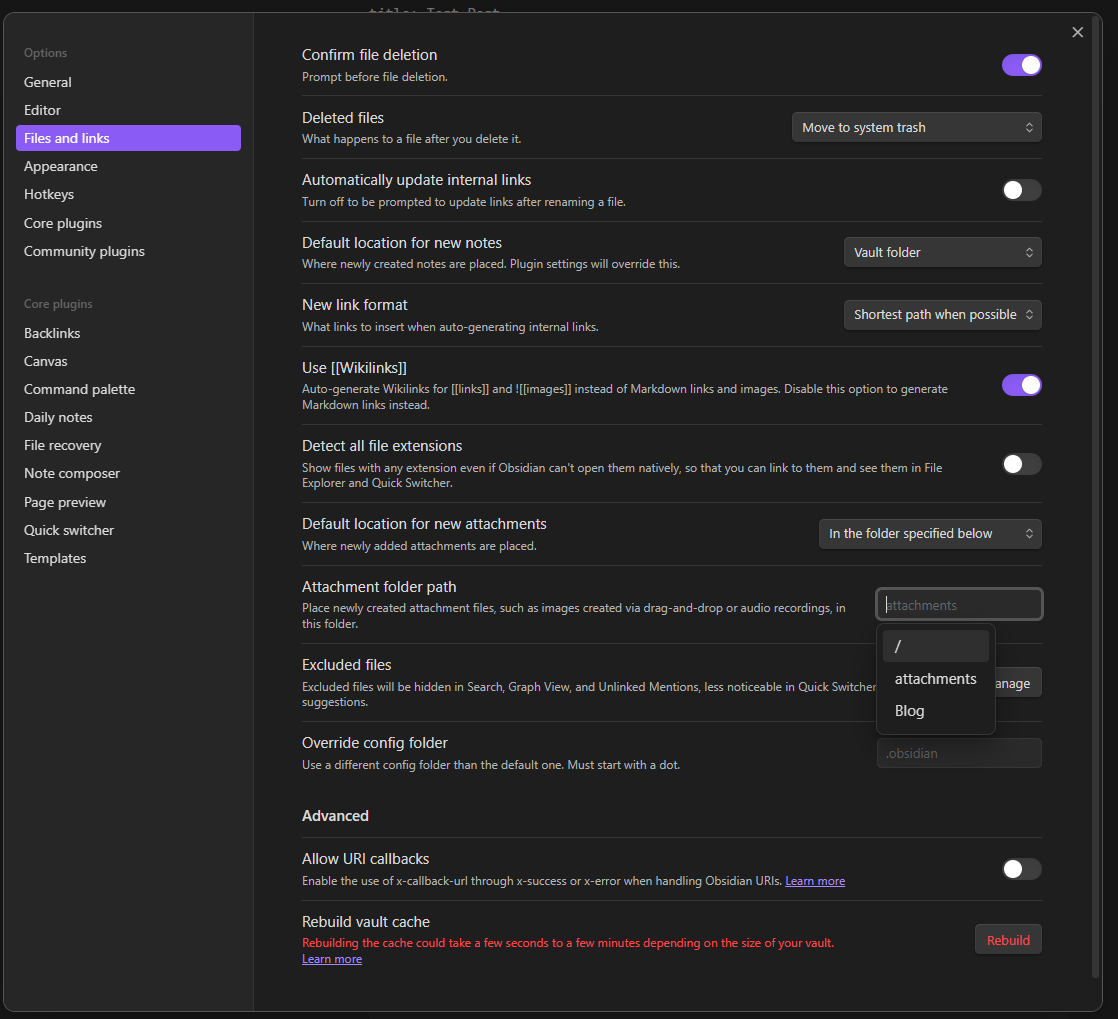

I also found that Chuck did not mention the Obsidian setting that controls where attachments are saved.

You can set it under Settings, Files and links, "Default location for new attachments".

Megascript update

So my megascript ended up looking like this

# PowerShell Script for Windows

# Set variables for Obsidian to Hugo copy
$sourcePath = "D:\obsidian\Mikael\Blog"
$destinationPath = "D:\Projects\blog\BrainDump\content\posts"

# Set error handling
$ErrorActionPreference = "Stop"
Set-StrictMode -Version Latest

# Change to the script's directory
$ScriptDir = Split-Path -Parent $MyInvocation.MyCommand.Definition
Set-Location $ScriptDir

# Check for required commands
$requiredCommands = @('git', 'hugo')

# Check for Python command (python or python3)
if (Get-Command 'python' -ErrorAction SilentlyContinue) {
    $pythonCommand = 'python'
} elseif (Get-Command 'python3' -ErrorAction SilentlyContinue) {
    $pythonCommand = 'python3'
} else {
    Write-Error "Python is not installed or not in PATH."
    exit 1
}

foreach ($cmd in $requiredCommands) {
    if (-not (Get-Command $cmd -ErrorAction SilentlyContinue)) {
        Write-Error "$cmd is not installed or not in PATH."
        exit 1
    }
}

# Sync posts from Obsidian to Hugo content folder using Robocopy
Write-Host "Syncing posts from Obsidian..."

if (-not (Test-Path $sourcePath)) {
    Write-Error "Source path does not exist: $sourcePath"
    exit 1
}

if (-not (Test-Path $destinationPath)) {
    Write-Error "Destination path does not exist: $destinationPath"
    exit 1
}

# Use Robocopy to mirror the directories
$robocopyOptions = @('/MIR', '/Z', '/W:5', '/R:3')
$robocopyResult = robocopy $sourcePath $destinationPath @robocopyOptions

# Robocopy exit codes below 8 are not failures
if ($LASTEXITCODE -ge 8) {
    Write-Error "Robocopy failed with exit code $LASTEXITCODE"
    exit 1
}

# Process Markdown files with Python script to handle image links
Write-Host "Processing image links in Markdown files..."

if (-not (Test-Path "images.py")) {
    Write-Error "Python script images.py not found."
    exit 1
}

# Execute the Python script.
# A failing native command does not throw, so check the exit code instead of using try/catch.
& $pythonCommand images.py
if ($LASTEXITCODE -ne 0) {
    Write-Error "Failed to process image links."
    exit 1
}

# Add changes to Git
Write-Host "Staging changes for Git..."

# An empty result from git is $null, and ($null -ne "") is true, so cast the output to a boolean instead
$hasChanges = [bool](git status --porcelain)
if (-not $hasChanges) {
    Write-Host "No changes to stage."
} else {
    git add .
}

# Commit changes with a dynamic message
$commitMessage = "New Blog Post on $(Get-Date -Format 'yyyy-MM-dd HH:mm:ss')"
$hasStagedChanges = [bool](git diff --cached --name-only)
if (-not $hasStagedChanges) {
    Write-Host "No changes to commit."
} else {
    Write-Host "Committing changes..."
    git commit -m "$commitMessage"
}

# Push all changes to the main branch
Write-Host "Deploying to GitLab Master..."
git push origin master
if ($LASTEXITCODE -ne 0) {
    Write-Error "Failed to push to Master branch."
    exit 1
}

Write-Host "All done! Site synced, processed, committed, built, and waiting for deploy by GIT CI/CD."
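The check for an exit code of 8 or higher works because robocopy's exit code is a bit field: values below 8 only report what was copied or skipped, while the 8 and 16 bits mean that copies failed or that a fatal error occurred. Here is a small Python sketch of that decoding, just to illustrate the idea. The helper name `describe_robocopy_exit` is made up for this example and is not part of the megascript.

```python
# Bit flags used by robocopy's exit code
ROBOCOPY_FLAGS = {
    1: "files copied",
    2: "extra files or directories detected",
    4: "mismatched files or directories detected",
    8: "some files or directories could not be copied",
    16: "fatal error, nothing was copied",
}


def describe_robocopy_exit(code: int) -> tuple:
    """Return (succeeded, messages) for a robocopy exit code."""
    messages = [text for bit, text in ROBOCOPY_FLAGS.items() if code & bit]
    if not messages:
        messages = ["no changes, source and destination already in sync"]
    # Same rule as the script: anything from 8 upwards counts as a failure
    return code < 8, messages


print(describe_robocopy_exit(3))
print(describe_robocopy_exit(9))
```

So a run that copies new posts and also removes stale ones (code 3) still counts as a success, which is why the script cannot simply test for a non-zero exit code.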

I also corrected an issue I found in the images.py file that Chuck created.

For me it added a double ! in front of the images when it processed the .md files.

I corrected the following line, from:

# Prepare the Markdown-compatible link with %20 replacing spaces

markdown_image = f"![Image Description](/images/{image.replace(' ', '%20')})"

To:

# Prepare the Markdown-compatible link with %20 replacing spaces

markdown_image = f"[Image Description](/images/{image.replace(' ', '%20')})"

I am unsure if this was a mistake from Chuck or if it's just Hugo and my theme not playing ball. I did find some interesting reading about it here
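To make the double `!` issue concrete, here is a minimal sketch of the link conversion on its own. It assumes the Obsidian embeds look like `![[Pasted image 1.png]]`; the function name `convert_embeds`, the `with_bang` switch and the sample text are made up for illustration and are not part of Chuck's script. Because the regex only matches the `[[...]]` part, the `!` that Obsidian already wrote in front of it stays in place, so the replacement must not add another one.

```python
import re

# Same pattern as in the script: captures the file name inside [[...]]
IMAGE_PATTERN = re.compile(r'\[\[([^]]*\.(?:png|jpg))\]\]')


def convert_embeds(content: str, with_bang: bool = False) -> str:
    """Rewrite [[image.png]] to a Markdown link under /images/.

    with_bang=True mimics a replacement string that starts with "!",
    which doubles the "!" already present before the brackets.
    """
    for image in IMAGE_PATTERN.findall(content):
        prefix = "!" if with_bang else ""
        link = f"{prefix}[Image Description](/images/{image.replace(' ', '%20')})"
        content = content.replace(f"[[{image}]]", link)
    return content


sample = "Intro ![[Pasted image 1.png]] outro"
fixed = convert_embeds(sample)
doubled = convert_embeds(sample, with_bang=True)
print(fixed)
print(doubled)
```

The first line printed contains a single `!` before the link, the second one contains `!!`, which matches what I saw in my generated posts.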

Gitlab Pipeline

I also created a .gitlab-ci.yml file to tell the pipeline what to do once a commit has been pushed to the repo.

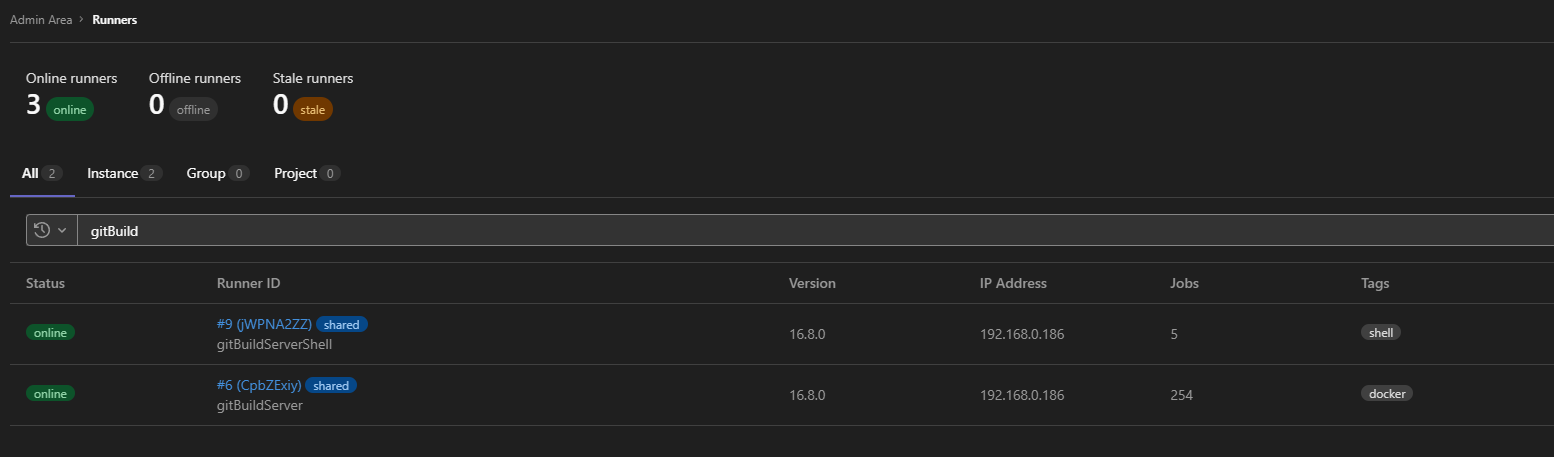

I have a gitlab-runner on a server with two runners: one with a docker executor and one with a shell executor.

The create stage builds the Docker image from the Dockerfile and pushes it to my private Docker registry with the image name blog:latest.

The redeploy stage was a bit tricky to figure out, since the documentation for the Portainer API and its underlying Docker API did not specify any recreate endpoint. I ended up reverse engineering the POST call made by the Portainer UI. More on that later in the post.

The .gitlab-ci.yml file:

stages:
  - create
  - redeploy

build:
  timeout: 1 hours
  image: docker:latest
  stage: create
  tags:
    - docker
  services:
    - name: docker:18-dind
      command: ["--tls=false"]
  variables:
    DOCKER_TLS_CERTDIR: ""
    PROJECT_DIR: "/home/gitlab-runner/current-build/"
    CI_REGISTRY_USER: "duux"
    CI_REGISTRY: "r.bendiksens.net"
    CI_REGISTRY_IMAGE: "blog:latest"
  script:
    - docker login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" $CI_REGISTRY
    - docker build --pull -t "$CI_REGISTRY_IMAGE${tag}" .
    - docker image tag $CI_REGISTRY_IMAGE${tag} $CI_REGISTRY/$CI_REGISTRY_USER/$CI_REGISTRY_IMAGE${tag}
    - docker image push $CI_REGISTRY/$CI_REGISTRY_USER/$CI_REGISTRY_IMAGE${tag}
  only:
    - master

redeploy:
  stage: redeploy
  tags:
    - shell
  script:
    - curl http://docker01.bendiksens.net:7050/rc?name=blog
  only:
    - master

If you don't have a gitlab-runner yet, you can easily create one on a server by doing the following:

sudo apt install gitlab-runner
sudo gitlab-runner register

Enter the GitLab instance URL (for example, https://gitlab.com/):
https://git.bendiksens.net/
Enter the registration token:
TOKEN_TOKEN_TOKEN
Enter a description for the runner:
[gitBuildServer]: gitBuildServerShell
Enter tags for the runner (comma-separated):
shell
Enter optional maintenance note for the runner:
<Empty>
Registering runner... succeeded runner=oaDuE-yw
Enter an executor: shell, virtualbox, docker+machine, docker-autoscaler, instance, custom, ssh, parallels, docker, docker-windows, kubernetes:
shell
Runner registered successfully. Feel free to start it, but if it's running already the config should be automatically reloaded!

Configuration (with the authentication token) was saved in "/etc/gitlab-runner/config.toml"

You can run the registration again to add a runner with the docker executor. Make sure to change the following:

- Executor

- Tag

- Description

You can find them on the gitlab server as admin under /admin/runners as displayed in the picture below.

It might be good to read the GitLab documentation for the runners and the changes that can be made in the gitlab-runner config under /etc/gitlab-runner/config.toml

Or hit me up if you have any questions :)

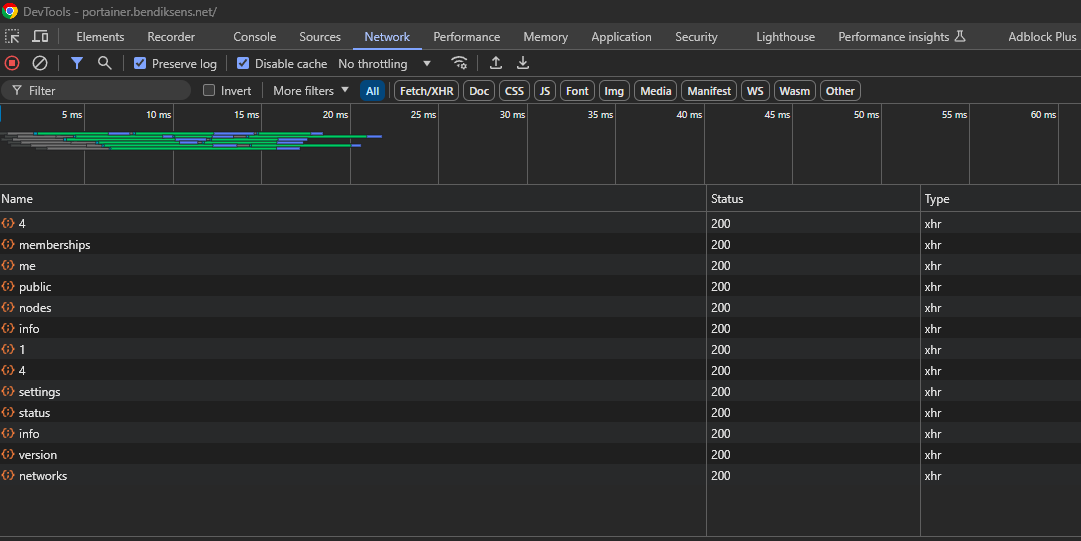

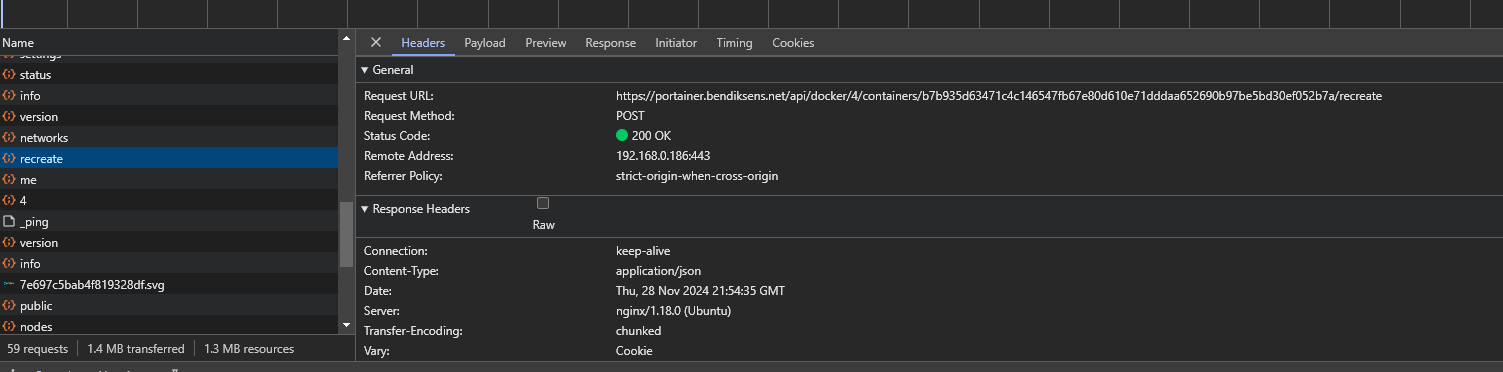

Finding the recreate call from Portainer UI

With the browser's developer tools open, we can click the Recreate button on the container. As long as the Network tab is recording the traffic being sent from the browser, we can see which URL is being used and what payload it sends.

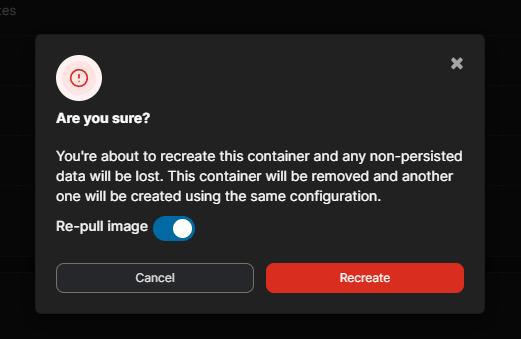

With the Dev Tools open and ready to intercept, we can run the container recreate function and enable the Re-pull image option.

Among the requests it records you can see a POST called recreate. This is the underlying Docker API endpoint that is exposed through the Portainer API.

As you can see, the request gives us the following URL, which we need to use later:

https://portainer.bendiksens.net/api/docker/4/containers/b7b935d63471c4c146547fb67e80d610e71dddaa652690b97be5bd30ef052b7a/recreate

If we click on the Payload tab, we can see the actual payload it sends with the POST:

{"PullImage":true}

Now we know the undocumented function to recreate the container and pull the new image at the same time.
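Put together, the captured call can be described in a few lines of Python. This is only a sketch of the request as I observed it in the browser, not an official Portainer API reference. `build_recreate_request` is a hypothetical helper, and the endpoint id `4` and the container id are simply the values from the URL above.

```python
import json


def build_recreate_request(base_url: str, endpoint_id: int, container_id: str,
                           pull_image: bool = True) -> tuple:
    """Return (method, url, body) for the recreate call seen in the dev tools."""
    url = (
        f"{base_url.rstrip('/')}/api/docker/{endpoint_id}"
        f"/containers/{container_id}/recreate"
    )
    # Compact separators give the same body the browser sent
    body = json.dumps({"PullImage": pull_image}, separators=(",", ":"))
    return "POST", url, body


method, url, body = build_recreate_request(
    "https://portainer.bendiksens.net",
    4,
    "b7b935d63471c4c146547fb67e80d610e71dddaa652690b97be5bd30ef052b7a",
)
print(method, url)
print(body)
```

Nothing is sent here; the snippet only assembles the pieces. The container id changes every time the container is recreated, so in practice it has to be looked up by name first.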

Service to call in the redeploy stage

As you can see in the redeploy stage of my CI/CD pipeline, I do a curl call to an internal endpoint I created. There is probably a better way to do this, but this works for me for now.

I created a new project folder with the following files:

Dockerfile:

FROM python:3.9
EXPOSE 5000
COPY requirements.txt .
RUN python3 -m pip install -r requirements.txt
COPY main.py .
CMD ["python3", "main.py"]

requirements.txt:

flask
requests

main.py:

from flask import Flask, make_response, request
import requests
import json

app = Flask(__name__)

@app.route('/rc', methods=['GET'])
def redeployContainer():
    # Get name of container from URL
    name = str(request.args.get('name'))

    # Auth to Portainer
    url = "https://portainer.bendiksens.net/api/auth"
    payload = json.dumps({
        "password": "****************************",
        "username": "********************"
    })
    headers = {
        'Content-Type': 'application/json',
    }
    response = requests.request("POST", url, headers=headers, data=payload)
    data = response.json()

    try:
        # The token is not printed, so it does not end up in the container logs
        token = data["jwt"]
    except (KeyError, TypeError):
        print("Did not get token.. harry carry time")
        return make_response('{"status": "failed auth"}', 400)

    # Locate ID of container to use in URL later based on name
    url = "https://portainer.bendiksens.net/api/endpoints/4/docker/containers/json"
    payload = {}
    headers = {
        'Authorization': token
    }
    response = requests.request("GET", url, headers=headers, data=payload)
    data = response.json()

    cid = None
    results = [{"name": item["Names"][0].strip("/"), "id": item["Id"]} for item in data]
    for result in results:
        if result['name'] == name:
            cid = result['id']

    if cid is None:
        return make_response('{"cid": null}', 400)

    # Recreate based on our findings earlier
    url = "https://portainer.bendiksens.net/api/docker/4/containers/" + cid + "/recreate"
    payload = json.dumps({
        "PullImage": True
    })
    headers = {
        'Authorization': token
    }
    response = requests.request("POST", url, headers=headers, data=payload)

    return make_response('{"recreate": "OK"}', 200)

# main driver function
if __name__ == '__main__':
    app.run(host='0.0.0.0')
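The name lookup in the middle of main.py is the step most likely to trip on real data, so here it is isolated as a small sketch. It assumes the container list has the shape the code above relies on: a list of objects with `Names` and `Id`, where names are prefixed with `/`. The function `find_container_id` and the sample entries are made up for illustration.

```python
from typing import Optional


def find_container_id(containers: list, name: str) -> Optional[str]:
    """Return the Id of the container whose first name matches, else None."""
    for item in containers:
        names = item.get("Names") or []
        # Names come back with a leading slash, for example "/blog"
        if names and names[0].strip("/") == name:
            return item["Id"]
    return None


sample = [
    {"Id": "aaa111", "Names": ["/rcService"]},
    {"Id": "bbb222", "Names": ["/blog"]},
]
print(find_container_id(sample, "blog"))
print(find_container_id(sample, "missing"))
```

Unlike the loop in main.py, this version returns as soon as it finds a match and skips entries without any name instead of raising an error. Since container names are unique on a Docker host, the result should be the same.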

Once we have these files created, we can build the image, push it and deploy the service.

WorkStation side:

docker build -t duux/rcservice .
docker image tag duux/rcservice r.bendiksens.net/duux/rcservice:latest
docker image push r.bendiksens.net/duux/rcservice:latest

Server side:

docker pull r.bendiksens.net/duux/rcservice:latest
docker run -d \
  -p 7050:5000 \
  --name rcService \
  r.bendiksens.net/duux/rcservice:latest

Note that Docker image names must be lowercase, so the image is called rcservice even though the container is named rcService.

So by passing a container name to this service, it will pull the image from my registry and recreate the container. I could have used Watchtower here to detect and redeploy, but I wanted it to be snappy instead of waiting for an interval.

http://{IP}:{PORT}/rc?name={containerName}

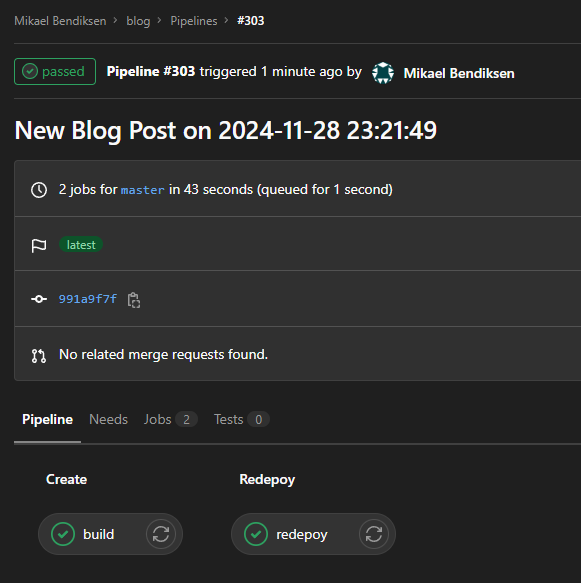
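If you want to trigger the service from a script instead of curl, the URL can be assembled with the Python standard library. This is a minimal sketch, assuming the service listens on the host and port you mapped in `docker run`. The helper name `build_rc_url` is hypothetical, and the actual HTTP call is left commented out so the snippet runs without network access.

```python
from urllib.parse import urlencode


def build_rc_url(host: str, port: int, container_name: str) -> str:
    """Build the /rc URL, URL-encoding the container name."""
    query = urlencode({"name": container_name})
    return f"http://{host}:{port}/rc?{query}"


url = build_rc_url("docker01.bendiksens.net", 7050, "blog")
print(url)

# To actually trigger the redeploy (needs network access to the service):
# from urllib.request import urlopen
# with urlopen(url, timeout=30) as resp:
#     print(resp.status, resp.read().decode())
```

Encoding the name is not strictly needed for a simple name like blog, but it keeps the request valid if a container name ever contains characters that are special in a URL.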

Result

Seems to be working like a charm!

I will probably make some improvements to the setup going forward.

The post you are reading right now was published by the run shown below.

PS D:\Projects\blog> .\deploy.ps1
Syncing posts from Obsidian...
Processing image links in Markdown files...
Markdown files processed and images copied successfully.
Staging changes for Git...
Committing changes...
[master 991a9f7] New Blog Post on 2024-11-28 23:21:49
 7 files changed, 293 insertions(+), 10 deletions(-)
 create mode 100644 BrainDump/static/images/Pasted image 20241128222258.png
 create mode 100644 BrainDump/static/images/Pasted image 20241128225332.png
 create mode 100644 BrainDump/static/images/Pasted image 20241128225429.png
 create mode 100644 BrainDump/static/images/Pasted image 20241128225704.png
 create mode 100644 BrainDump/static/images/Pasted image 20241128231719.png
Deploying to GitHub Master...
Enumerating objects: 22, done.
Counting objects: 100% (22/22), done.
Delta compression using up to 32 threads
Compressing objects: 100% (12/12), done.
Writing objects: 100% (14/14), 215.65 KiB | 2.99 MiB/s, done.
Total 14 (delta 3), reused 0 (delta 0), pack-reused 0 (from 0)
To repo02.bendiksens.net:unk1nd/blog.git
   9ed2f86..991a9f7  master -> master
All done! Site synced, processed, committed, built, and waiting for deploy by GIT CI/CD.

Linux Version

Here is an updated version of the megascript and the image script, combined into one neat Python script for use on Linux.

#!/usr/bin/env python3
import subprocess
import datetime
import sys
import os
import re
import shutil


def run_command(cmd):
    """Run a shell command and return the CompletedProcess object.

    If the command fails, return the exception so you can handle it."""
    try:
        result = subprocess.run(cmd, shell=True, capture_output=True, text=True, check=True)
        return result
    except subprocess.CalledProcessError as e:
        return e


def main():
    # Set variables for Obsidian to Hugo copy.
    # Update these paths as needed for your Linux environment.
    source_path = "/home/duux/obsidian/Mikael/Blog"
    destination_path = "/home/duux/Projects/blog/BrainDump/content/posts"
    posts_dir = r"BrainDump/content/posts"
    attachments_dir = r"/home/duux/obsidian/Mikael/attachments"
    static_images_dir = r"BrainDump/static/images"

    # Use rsync to mirror the directories.
    rsync_options = "-avz --delete"
    rsync_command = f"rsync {rsync_options} {source_path}/ {destination_path}/"
    print("Mirroring directories using rsync...")
    rsync_result = run_command(rsync_command)
    if isinstance(rsync_result, subprocess.CalledProcessError):
        print("Error during directory mirroring:")
        print(rsync_result.stderr)
        sys.exit(1)
    else:
        print("Directory mirroring completed successfully.")

    for filename in os.listdir(posts_dir):
        if filename.endswith(".md"):
            filepath = os.path.join(posts_dir, filename)
            with open(filepath, "r", encoding="utf-8") as file:
                content = file.read()

            # Step 2: Find all image links in the format [[image.png]]
            images = re.findall(r'\[\[([^]]*\.(?:png|jpg))\]\]', content)

            # Step 3: Replace image links and ensure URLs are correctly formatted
            for image in images:
                # Prepare the Markdown-compatible link with %20 replacing spaces
                markdown_image = f"[Image Description](/images/{image.replace(' ', '%20')})"
                content = content.replace(f"[[{image}]]", markdown_image)

                # Step 4: Copy the image to the Hugo static/images directory if it exists
                image_source = os.path.join(attachments_dir, image)
                if os.path.exists(image_source):
                    shutil.copy(image_source, static_images_dir)

            # Step 5: Write the updated content back to the markdown file
            with open(filepath, "w", encoding="utf-8") as file:
                file.write(content)

    # Add changes to Git.
    print("Staging changes for Git...")
    status_result = run_command("git status --porcelain")
    if status_result.stdout.strip() == "":
        print("No changes to stage.")
    else:
        run_command("git add .")

    # Commit changes with a dynamic message.
    commit_message = "New Blog Post on " + datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    diff_result = run_command("git diff --cached --name-only")
    if diff_result.stdout.strip() == "":
        print("No changes to commit.")
    else:
        print("Committing changes...")
        commit_result = run_command(f'git commit -m "{commit_message}"')
        if isinstance(commit_result, subprocess.CalledProcessError):
            print("Error committing changes:")
            print(commit_result.stderr)
            sys.exit(1)

    # Push all changes to the main branch.
    print("Deploying to GitHub Master...")
    push_result = run_command("git push origin master")
    if isinstance(push_result, subprocess.CalledProcessError):
        print("Failed to push to Master branch:")
        print(push_result.stderr)
        sys.exit(1)

    print("All done! Site synced, processed, committed, built, and waiting for deploy by GIT CI/CD.")


if __name__ == "__main__":
    main()